Since this will officially be my first blog post as a Citrix CTP, I would like to start by thanking everybody for your support during the last couple of years and of course for all the congratulations and well wishes I have received since the official announcement last week. It has been a truly wonderful experience! Having said that, let’s get back on topic. This is not going to be an MCS vs. PVS kind of blog post; there are plenty of good ones out there already. Instead I will assume that you already chose MCS over PVS. However, while the choice has been made, you still have some doubts as to whether MCS is up to the task at hand. Throughout this post I will summarize some things to think about and questions to ask before and while implementing Citrix MCS.

First of all, you chose MCS because it is simple. It’s built right into XenDesktop (note that where I say XenDesktop I also mean XenApp) and you don’t have to build and maintain a separate infrastructure like with PVS. Again, these are your thoughts, not mine; PVS might be worth considering as well.

As of XenDesktop version 7.0 MCS can be used to provision virtual machines based on Server Operating systems as well.

Virtual is the way forward, right?

You decided long ago that virtual is the way forward and that it doesn’t really matter if it’s on-premises or in the cloud since MCS can do both. What it cannot do is provision physical machines, but of course you took that into consideration as well. Hint: Provisioning Services can do both virtual and physical.

There might be circumstances where you would like to be able to assign a few persistent VM’s to some users, and although somewhat cumbersome (this isn’t a very popular approach) MCS can help you with that as well. It will use so-called PvD’s, or Personal vDisks, for this, a technology which is also supported by PVS by the way. Both are out of scope for now.

A quick breakdown

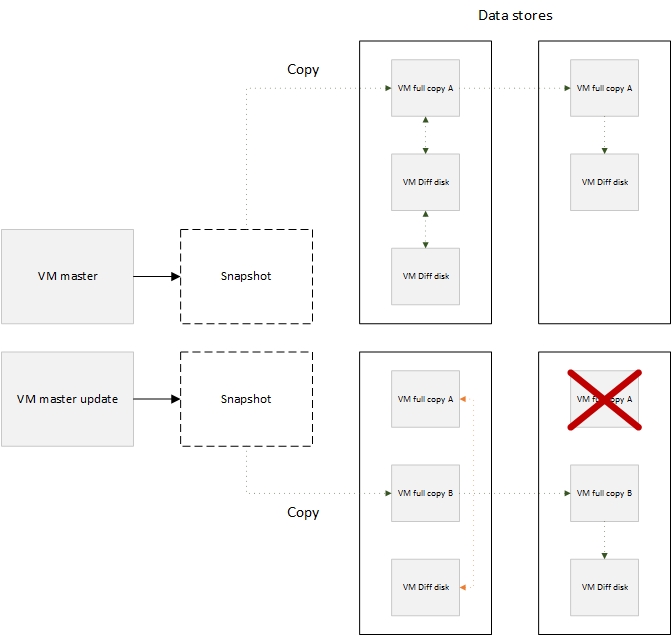

Before we continue it is important to understand what happens under the hood when you use MCS to provision one, or multiple new VM’s. It all starts with a master VM, golden image or whatever you would like to call it. When you create a new Machine Catalog from Studio you will be asked to select this master VM, which will then serve as the base image from where all other VM’s will be provisioned.

It works like this: MCS will first take a snapshot of your master VM (template machine), or you can take one manually, which has the added advantage that you can name it yourself. Next, this ‘template’ machine will be copied over to all data stores known to your Host Connection as configured in Studio. All VM’s that will be created as part of the provisioning process will consist of a differencing disk and an identity disk, both of which will be attached to the VM (how this is handled technically differs slightly per hypervisor). The differencing disk is meant to store all changes made to the VM (it functions as a write cache) while the identity disk is meant to give the VM its own identity (duh), used within Active Directory for example.
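To make the moving parts a bit more tangible, here is a small conceptual model in Python. It is only a sketch of the flow described above, not Citrix code: the class names, the `provision` function and the round-robin placement of VM’s across data stores are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Datastore:
    name: str
    # Names of the master snapshots that have been copied to this data store
    image_copies: list = field(default_factory=list)


@dataclass
class ProvisionedVM:
    name: str
    datastore: str
    base_image: str         # the snapshot copy this VM reads from
    differencing_disk: str  # stores all changes made to the VM
    identity_disk: str      # gives the VM its own identity (Active Directory, etc.)


def provision(snapshot, datastores, vm_names):
    """Copy the snapshot to every data store, then create the VM's."""
    # Step 1: every data store known to the host connection gets a full copy
    for ds in datastores:
        ds.image_copies.append(snapshot)

    # Step 2: each VM gets its own differencing disk and identity disk.
    # Round-robin placement is an assumption made for this sketch.
    vms = []
    for i, name in enumerate(vm_names):
        ds = datastores[i % len(datastores)]
        vms.append(
            ProvisionedVM(name, ds.name, snapshot, f"{name}-diff", f"{name}-identity")
        )
    return vms


stores = [Datastore("ds1"), Datastore("ds2")]
vms = provision("win7-snapshot-v1", stores, ["vm1", "vm2", "vm3"])
```

The thing to notice is step 1: the number of image copies grows with the number of data stores, regardless of how many VM’s end up on each of them.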

Why does all this matter?

Each time you create a new master VM, or update an existing one for that matter, this process repeats itself. This means that if you have multiple master VM’s, let’s say two for VDI (Windows 7 and 8) and two for RDSH (Windows Server 2008 R2 and 2012 R2), the system will create a snapshot of every master VM, four in this example, which will then need to be copied over to all of the data stores known to your host connection.

As mentioned, when updating an existing master image, the same process is repeated. The system will basically treat the updated master VM as a new master VM, and as such a snapshot will again be created and copied over to the accompanying data stores.

For obvious reasons, a few questions that go with this…

- How many master virtual machines will you be managing? Meaning the different types of virtual machines, Windows 7, 8 and so on.

- How many data stores will you be using?

- How many times per week, month or year will your master VM(s) need to be updated? This will always be a hard question to answer, though.

- Does your storage platform support thin provisioning? If not, then every provisioned VM will be as big as the master VM it is based on.

By answering these types of questions you will have at least an indication on the amount of storage needed and the administrative overhead that comes with maintaining your virtual infrastructure based on MCS.
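As a rough illustration of how the answers to these questions turn into a number, here is a back-of-the-envelope sketch in Python. The function name and all of the sample figures (image sizes, VM counts, average differencing disk size) are made up for the example; plug in your own.

```python
def estimate_storage_gb(masters, datastore_count, avg_diff_disk_gb, thin_provisioned=True):
    """Rough storage estimate in GB.

    masters: list of (image_size_gb, vm_count) tuples, one per master VM.
    """
    total = 0.0
    for image_size_gb, vm_count in masters:
        # One full copy of the master image on every data store
        total += image_size_gb * datastore_count
        # Without thin provisioning every VM is as big as its master VM
        per_vm_gb = avg_diff_disk_gb if thin_provisioned else image_size_gb
        total += vm_count * per_vm_gb
    return total


# Hypothetical environment: a 40 GB desktop image with 200 VM's and a
# 60 GB server image with 20 VM's, spread over 4 data stores.
masters = [(40, 200), (60, 20)]
thin = estimate_storage_gb(masters, datastore_count=4, avg_diff_disk_gb=5)
thick = estimate_storage_gb(masters, datastore_count=4, avg_diff_disk_gb=5,
                            thin_provisioned=False)
print(thin, thick)
```

With these sample numbers the thin provisioned estimate comes to 1,500 GB and the non-thin one to 9,600 GB, which shows why the thin provisioning question matters so much.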

Your workloads

As we all know, virtualising 100% of your application workload is not going to happen any time soon. And even when a large part is virtualized, using App-V for example, applications might be pre-cached, meaning you will still need to ‘break open’ the base image they reside on if the application itself needs to be updated, patched etc. in some way. Of course the same applies when applications and/or plug-ins are installed in the base image.

Now imagine managing 4 (or more) different base images (master VM’s), all with a relatively intensive maintenance cycle, perhaps caused by a bunch of ‘home made’ applications that need to be updated on a weekly basis for example. Every week these images, or master VM’s, need to be updated at least once, meaning a new snapshot per master image that will need to be copied down to all the participating data stores for each image type, taking up CPU resources and causing a peak in IO and network resource usage. This alone can be a time consuming process depending on the number and size of your images and the number of data stores involved.
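To get a feel for what such a maintenance cycle costs, you could run a quick calculation like the one below. It is a simplified sketch: it assumes the copies run one after the other at a constant, sustained throughput, and the image sizes and the 200 MB/s figure are invented for the example.

```python
def update_copy_cost(image_sizes_gb, datastore_count, updates_per_week, throughput_mb_s):
    """Return (GB copied per week, hours spent copying per week)."""
    # Every update means one full copy of each image to each data store
    gb_per_week = sum(image_sizes_gb) * datastore_count * updates_per_week
    seconds = gb_per_week * 1024 / throughput_mb_s
    return gb_per_week, seconds / 3600


# Four master images (two of 40 GB, two of 60 GB), four data stores,
# one update per image per week, 200 MB/s sustained copy throughput.
gb, hours = update_copy_cost([40, 40, 60, 60], datastore_count=4,
                             updates_per_week=1, throughput_mb_s=200)
print(f"{gb} GB copied, roughly {hours:.1f} hours per week")
```

In this example that is 800 GB of copy traffic and a little over an hour of copying every week, before a single VM has rebooted onto the new image.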

Today technologies like application layering and containerization can help us overcome most of these application related issues, however, the general adoption of these kinds of technologies and products will take some time.

What about rollbacks?

Rollbacks are treated the same way (there is a rollback option available in Studio) in that they are seen as yet another image that is different from the one your VM’s currently rely on. This means that the whole process as described above will repeat itself.

Some more considerations

When a master VM / image is updated, copied over to all data stores etc. and assigned to the appropriate VM’s, these VM’s will first need to be rebooted to be able to make use of the new or updated master VM. While this may sound like a relatively simple process, there are some things you need to take into consideration. Here are a few…

- When rebooting a couple hundred of virtual machines (or more) this can have a potential negative impact on your underlying storage platform. Also referred to as a boot storm. This is not something you want to risk during work hours.

- Some companies have very strict policies when it comes to idle and disconnected sessions. For example, there might still be some user sessions in a disconnected state at the time you want to reboot certain VM’s, but when the company policy states that users may not be forcibly logged off unless they log off themselves or the configured disconnect policy kicks in, you will have to reschedule or reconsider your planned approach. Not that uncommon, trust me.

- All this might also interfere with other processes that might be active during the night for example.
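One way to think about the first two points is to plan your reboots in batches and to set aside any VM’s you are not allowed to touch yet. The Python sketch below only does the planning; it does not talk to Studio or any Citrix API, and the `plan_reboots` name, the batch size and the idea of a ‘protected’ set (VM’s with disconnected sessions, for example) are assumptions for illustration.

```python
def plan_reboots(vm_names, batch_size, protected=frozenset()):
    """Split VM's into reboot batches and defer the protected ones.

    protected: names of VM's that may not be rebooted yet, for example
    because they still hold a disconnected user session.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")

    eligible = [vm for vm in vm_names if vm not in protected]
    deferred = [vm for vm in vm_names if vm in protected]
    # Small batches spread the read IO over time instead of one boot storm
    batches = [eligible[i:i + batch_size]
               for i in range(0, len(eligible), batch_size)]
    return batches, deferred


vms = [f"vm{i}" for i in range(1, 8)]
batches, deferred = plan_reboots(vms, batch_size=3, protected={"vm3"})
print(batches)   # two batches of three VM's each
print(deferred)  # ['vm3'], to be rescheduled
```

How long to wait between batches depends entirely on your storage platform, so that is something to measure rather than guess.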

Storage implications

I already mentioned a couple of things to consider with regards to your underlying storage platform when using MCS, but I am pretty sure I can come up with a few more, so let’s have a(nother) look, shall we?

Do note that the numbers below are taken from several tests performed by Citrix.

- MCS is, or at least can be storage intensive with regards to the (read) IOPS needed. On average it will need around 1.6 times more IOPS when compared to PVS for example, again, mainly read traffic from the master VM as mentioned earlier. However… and this is often where the confusion starts, the 1.6 number is based on the overall average meaning that it also takes into account boot and logon storms (that’s also why they are mainly read IOPS). If we primarily focus on the so-called steady state IOPS then it’s closer to 1.2, a big difference right?

- While the above shouldn’t be too big of an issue with all modern storage technologies available today, it’s still something you need to consider.

- The ability to cache most reads is a very welcome storage feature! Don’t be surprised if you end up hitting a read cache ratio of 75% or higher, boosting overall performance.

- Consider having a look at IntelliCache, a technology built into XenServer, when deploying non-persistent VM’s on NFS based storage.

- When looking up IOPS recommendations based on Citrix best practice, be aware that these are (almost) exclusively based on steady state operations.

- A medium workload (based on the medium Login VSI workload) running on a Windows 7 or 8 virtual machine (provisioned with MCS) will need around 12 IOPS during its steady state with a read/write ratio of 20/80. During boot these numbers will be the other way around: 80/20.

- Running the same workload on a Server 2012 R2 virtual machine will lead to 9 IOPS during its steady state with a read/write ratio of 20/80. Note that this is on a per user basis. And again, during boot this will probably be closer to 80/20 read/write.

- Collect as much (IOPS / workload specific) data as possible and consult with one or more storage administrators at the customer’s site, if any. See what they think.

- Remember that there is more to IOPS than just the read / write ratios. Also consider the different types of IOPS like random and sequential, the configured storage block sizes used and the actual throughput available.

- Often multiple data stores are created to spread the overall IOPS load on the underlying storage platform. Think this through. Remember that each time a new master VM is created or one is updated it will need to be copied over to all data stores known to the Host Connection as configured in Citrix Studio.

- Load testing can provide us with some useful and helpful numbers with regards to performance and scale, however, don’t lose track of any potential resource intensive applications that might not be included during some of the standard baseline tests. You don’t want to run into any surprises when going live do you? Include them.

- Whenever possible try to scale for peaks, meaning boot, logon and logoff storms.

- If needed schedule and pre-boot multiple machines, VDI VM’s as well as any virtual XenApp servers, before the majority of your users start their day.

- Try to avoid overcommitting your hosts’ compute resources as much as possible.

- Failing over VM’s while using MCS isn’t a problem, but if you would like to move the accompanying virtual machine disks / files as well, using Storage vMotion, storage Live Migration and/or storage XenMotion for example, you are out of luck. This is not supported.

- There is a strong dependency between the VM and the Disk ID / datastore it resides on (information stored in the Central Site Database), once broken or interrupted your VM will not be able to boot properly.

- Consider implementing storage technologies and Hyper converged solutions like Nutanix, Atlantis, vSAN, VPLEX etc (there are plenty more out there) enabling you to move around your MCS based VM’s including their disks, either automated or manually, while maintaining the VM Disk ID dependency using and combining features like Shadow Clones, Data Locality, Data synchronizations and so on. Software defined storage is key.

- The ability to thin provision the earlier discussed differencing disks would be preferred. This will initially save you a lot of disk space, and if your environment isn’t that big (fewer than 1,000 VM’s), re-provisioning your VM’s once or twice a month might be an option as well. You might be able to get up to a few hundred machines per hour. This is something that your storage platform will need to support.

- The same applies to compression and de-duplication for example.

- I/O offload for writes and a caching mechanism of some sort for reads are both (really) nice to have’s as well!

- Monitor the growth of your differencing disks and size your storage platform accordingly. Do not forget to include the duplicate images of your master VM copied over to each data store when it comes to free GB’s needed.

- Also, when an image is updated, at least temporarily, there will be two full images / master VM’s residing in each datastore, do not forget to include these into your GB calculations as well.

- As soon as all VM’s within the data store are using the new or updated image the ‘old’ one will be deleted automatically. By default this will happen after a time period of six hours but this is configurable through PowerShell.

- Think about a reboot schedule for your XenApp servers. Each reboot will ‘refresh’ the differencing disks, making them start from zero. Reboot your VDI VM’s on a daily basis or make them automatically reboot once a user logs off, for example.

- Consider your underlying and supported hypervisor when using MCS. For example, you can use NFS for XenServer and ESXi or clustered shared volumes for Hyper-V. However, there is no thin provisioning on XenServer with block based storage.

- Citrix used to recommend using NFS exclusively to go with MCS, but that was more geared towards the inability of XenServer to thin provision disks on block based storage than anything else. While NFS will be more straightforward to configure and maintain, block based storage with MCS is also supported and used in many production environments.

- When you update the master VM / image of one or more persistent virtual machines, only newly provisioned persistent VM’s will be able to use the updated master image. When dealing with non-persistent VM’s this works differently. Once the new or updated image is assigned and the VM’s reboot, the old image will be discarded and the new one will be used from then on.
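To put the steady state numbers from the list above to work, a quick calculation like the following can help when talking to your storage administrators. It is a sketch only: the function name and the machine and user counts are made up, while the 12 and 9 IOPS figures and the 20/80 read/write ratio are the ones quoted above.

```python
def iops_profile(count, iops_each, read_percent):
    """Total, read and write IOPS for a number of VM's or users."""
    total = count * iops_each
    reads = total * read_percent / 100
    return {"total": total, "read": reads, "write": total - reads}


# 500 Windows 7 VM's at 12 IOPS each, steady state, 20% reads
vdi = iops_profile(count=500, iops_each=12, read_percent=20)

# 300 users on Server 2012 R2 at 9 IOPS per user, steady state, 20% reads
rdsh = iops_profile(count=300, iops_each=9, read_percent=20)

print(vdi)   # total 6000, of which 1200 read and 4800 write
print(rdsh)  # total 2700, of which 540 read and 2160 write
```

For boot and logon storms you can reuse the same function with `read_percent=80`, but the per machine IOPS during boot is something you will have to measure yourself; the steady state figures do not apply there.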

Wrap up

While MCS might seem straightforward to set up and manage, and it certainly can be, there are still a few factors to consider. Of course all this is far from undoable; I just wanted to give you an idea of some of the bumps you might run into along the way, that’s all. Although I have never seen, managed or built one myself, I have read about multiple large (thousands of VM’s) MCS based deployments running and being maintained without too much trouble, even without any of the earlier mentioned ‘more modern’ storage and/or Hyper Converged platforms in place.

With just a few ‘minor’ tweaks (although I am aware that this will probably take a lot more work than I can imagine) like a storage independent (in memory) read caching mechanism, some form of image versioning, and the ability to move your VM’s including their disks / files across different storage platforms (I’m thinking DR or HA scenarios), I think MCS might eventually surpass PVS on many fronts. Because let’s face it, PVS is still the preferred platform in many to most cases, and with good reason. But wait, this was not meant as a VS. article, so forget I said that.

Impatient? Go with Nutanix.

When you do not want to wait for Citrix to come up with a revamped version of MCS you might want to consider having a look at Nutanix. Using their NDFS file system it can offer a single data store to the whole cluster, at the same time keeping all data local to each cluster node and its running VM’s using Data Locality. And last but not least, leverage Shadow Clones, which are specifically designed for technologies like MCS to automatically cache a local master VM / image copy to each Nutanix node in the cluster, significantly improving overall performance.

3 responses to “Citrix Machine Creation Services… What to consider!”

Very nice article and summary of information, Bas. I would add that many SDS solutions can assist by not only allowing for huge storage saving for MCS by leveraging thin provisioning, but also by improving read cache. With over 400 units, we see read cache rates of typically 85-90%, which is fantastic when you think of all the more IOPS you can use for the more costly write cycles. This can be achieved without even using any SSDs for the data storage at all! A SDS solution can provide better results than IntelliCache or even the new XenServer 6.5 in-memory read cache in Enterprise and Desktop+ versions, which we have tested. All this is part of the storage setup, so nothing really needs to be done at all on the VDI side once set up. Good storage IOPS are critical to good performance!

MCS still needs PVS’ cache in RAM with overflow to disk mechanism and if (hopefully, when!) that happens, they are probably going to be very close in performance. We also agree that MCS needs a way to allow VM storage to be seamlessly migrated to other storage repositories (SRs) when hosted on XenServer; it’s odd to allow that to be possible for any other VM storage hosted on XenServer other than in a XenDesktop environment. Just because VMware doesn’t let you do this should not stop XenServer from being able to support this and end up a step ahead. Having the flexibility to support moving VMs to different SRs is also vital for disaster recovery situations.

-=Tobias

Thanks Tobias, noted :)
