With all the excitement going on around Docker, and containerisation in general, including the latest rumours around Microsoft’s Nano Server, I thought it might be useful to have a closer look at this technology to see what it can and cannot do and when it might be a good time to actually start using it in a real-world scenario.

Throughout this article I’d like to zoom in on the general and inner workings of Docker and discuss some of the announcements Microsoft made around the 2016 Nano Server edition, which introduces Windows-based containers into the Docker ecosystem. I’ve also included a few notes on VMware’s latest announcement around Project Photon and Project Lightwave.

Open source

To give you a general idea of what Docker actually is and where it comes from, I’ll let Wikipedia do some of the talking first: “Docker is an open-source project that automates the deployment of applications inside software containers, by providing an additional layer of abstraction and automation of operating-system-level virtualization on Linux. Docker uses resource isolation features of the Linux kernel such as cgroups and kernel namespaces to allow independent ‘containers’ to run within a single Linux instance, avoiding the overhead of starting and maintaining virtual machines.”

A few gotchas

Docker only runs on Linux (at least for now) and as such only supports Linux orientated workloads and applications. To be able to use Docker within your existing Windows environment you will need to run a Linux virtual machine hosting your Docker installation. For this you might want to have a look at Boot2Docker. Once set up, you will use the Windows Docker client (CLI based) to control the virtualized Docker Engine to build, run, and manage your Docker containers. You can also use it to manage the (Linux) Docker hosts within your enterprise, directly from your Windows machine.

To be clear, the Docker client mentioned above can be installed natively on Windows; it is a Windows application. The Docker engine itself only runs on Linux, hence the VM mentioned earlier.

Docker is built up out of several parts and elements. Here they are. Parts:

- The server daemon, installed on the (Linux) server side.

- CLI, the command line interface to communicate with the server daemon, allows you to build, run, and manage your Docker containers. There is a Linux and Windows distribution available.

- Image index, a public or private repository holding ready-to-use Docker images.

Elements:

- Containers, a collection of various (Linux) kernel-level features needed to manage and control the applications running on top.

- Image, an image is probably best compared to a base operating system image used to run applications. It’s what ‘forms’ the container, it runs within the container and tells it what processes, services and/or applications to execute.

- Dockerfiles, used to create new Docker images. Each file holds a series of commands automating the image creation process.

Advantages…

Making use of containerized applications has several advantages.

- All applications get sandboxed and thus isolated from each other.

- Although containers rely on and make use of the underlying Linux kernel (multiple containers share the same kernel), processes are isolated from each other by making use of so-called namespaces (these are what actually create the container).

- Compute resources, like CPU, memory, IO traffic and certain network characteristics can be limited and controlled through cgroups (control groups).

- Containers are portable; they can easily be moved between systems. The only dependency is having the hosts set up to run the containers, meaning that Docker needs to be installed. This goes for physical as well as virtual machines, including cloud deployments. This makes developing, testing and accepting new releases a breeze.

- Containers, or rather images (as you will soon find out), are built using layers, one on top of the other, which keeps them lightweight. Because of this, creating snapshots and performing rollbacks when needed is easy as well.

- Containers are launched in seconds or less.

- They also help in achieving higher density, the ability to run more workloads on the same footprint.

- DevOps and containerization are a match made in heaven.

- It’s open source, anything can happen.

This comes from Docker.com:

One of the reasons Docker is so lightweight is because of these layers. When you change a Docker image, for example, update an application to a new version, a new layer gets built. Thus, rather than replacing the whole image or entirely rebuilding, as you may do with a virtual machine, only that layer is added or updated. Now you don’t need to distribute a whole new image, just the update, making distributing Docker images faster and simpler.
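To make the ‘only the update gets distributed’ idea a bit more tangible, here is a minimal sketch in Python. It is a toy model, not how Docker is implemented: an image is simply a list of layer IDs, and the image names and layer IDs are made up for illustration.

```python
# Toy model: an image is an ordered list of layer IDs (base layer first).
# All names and IDs below are hypothetical, purely for illustration.

def layers_to_transfer(new_image, layers_on_host):
    """Return the layers of new_image that the host does not hold yet."""
    return [layer for layer in new_image if layer not in layers_on_host]


app_v1 = ["base-os", "runtime", "app-1.0"]
app_v2 = ["base-os", "runtime", "app-1.1"]  # only the top layer changed

# The host already ran version 1, so it holds all of its layers.
host_layers = set(app_v1)

print(layers_to_transfer(app_v2, host_layers))  # ['app-1.1']
```

A brand new host, with no layers at all, would of course still need to fetch every layer once.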

Some disadvantages…

- No Windows applications just yet.

- It brings complexity to your environment. Although Docker, and containers in general, hold great potential, it isn’t ‘easy’ out of the box.

- Less isolation than a traditional virtual machine.

- Containers rely on, and need, the underlying Linux kernel, which introduces a potential security threat. Namespaces isolate the container’s, or application’s, view of the underlying Linux OS. However, not all kernel subsystems and devices are namespaced; those that aren’t are shared by all containers. It will be interesting to see how Microsoft will cope with this, since Windows tends to be a lot more prone to virus attacks and such. The ‘Nano’ footprint, which is supposed to be extremely small, is probably a (really) good start, though. And let’s not forget about the recently introduced Project Photon and Project Lightwave by VMware (see below), which add additional security measures.

- Although containers are ‘lightweight’ when it comes to portability and distribution, the applications running in them may perform slightly worse than natively installed applications.

- Managing the network side of things, like static IP addresses for example, isn’t straightforward. Interaction between containers is also questionable with regards to security. Using technologies like VMware’s NSX (network virtualization) to manage the interaction between containers where needed, by micro-segmenting the existing network and creating tightly controlled VLANs, will add an additional layer of security.

- It’s open source, anything can happen.

VMware

I already briefly mentioned Photon and Project Lightwave in the above section, but when you are done reading this article (it will make more sense then) make sure to check out VMware’s official announcement on this. Here’s a short excerpt…

Project Photon, a natural complement to Project Lightwave (see below) is a lightweight Linux operating system for containerized applications. Optimized for VMware vSphere and VMware vCloud Air environments,

Project Lightwave will be the industry’s first container identity and access management technology that extends enterprise-ready security capabilities to cloud-native applications. The distributed nature of these applications, which can feature complex networks of microservices and hundreds or thousands instances of applications, will require enterprises to maintain the identity and access of all interrelated components and users.

These two platforms (both are open source by the way) combined with the NSX technology mentioned earlier, introduce new and innovative ways of managing and securing containerized applications. Ok, back on topic, Docker containers, how do they work and what’s involved?!

Images, containers and Dockerfiles

Docker images are read-only templates from which containers are launched, or formed, once executed. The image file is what actually holds the configuration information. It can contain things like files, directories, executable commands, environment variables, processes, tools, applications and more.

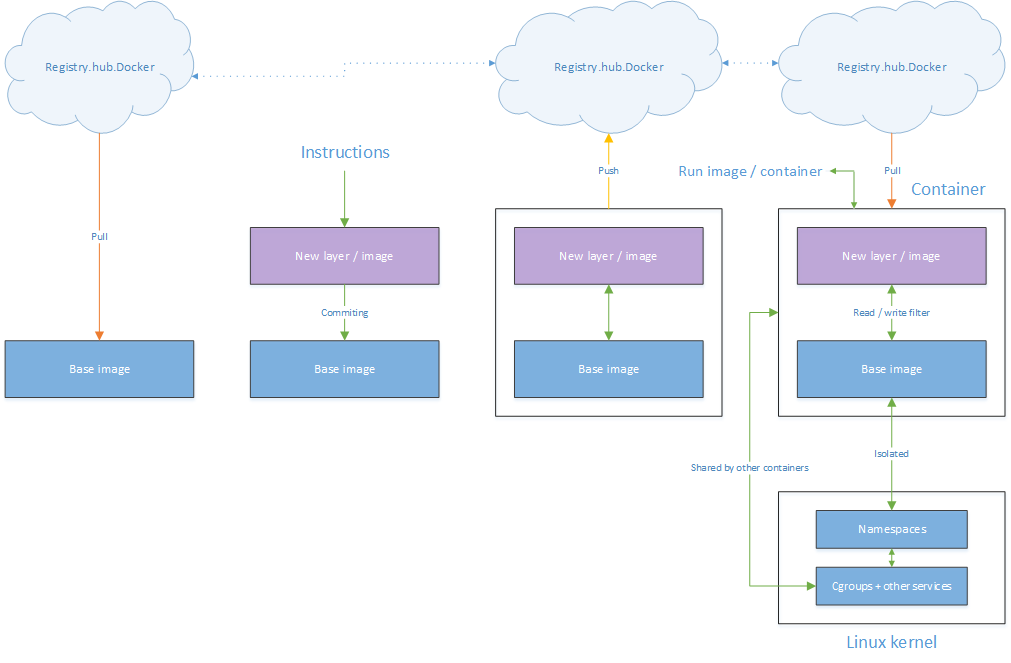

Docker images can be obtained in three ways: they can be created manually on top of a base image; they can be downloaded and launched directly (there are thousands available); or an existing image can be downloaded, altered, saved (which is actually called committing) and then executed. This may sound somewhat abstract at this point, but hopefully it will become (a bit more) clear in the next few minutes.

When manually building a new image you start out with a read-only base image file (I’ll show you how and where to get these in a moment). These files form the foundation from which you then further build, or expand, the base image by issuing so-called ‘instructions’, this way adding in specific configuration information like files, directories and so on, as mentioned above.

Each ‘instruction’ adds a new layer on top of your base image, which then needs to be ‘committed’ to save the configuration for later use. By committing these changes you basically create a new image file, which is linked to the base image file (much like a differencing disk), and as such will only contain the differences between those two files. Theoretically this process can be repeated many times over, adding in additional (layered) images on top of the base image file; however, it is recommended to keep the total number of layers as low as possible. I’ve also tried to visualise this process, so if this doesn’t make sense at all, scroll down a bit.
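The ‘a committed image only contains the differences’ part can be sketched in a few lines of Python. This is a simplified model under my own assumptions: a filesystem is just a dictionary of path to content, the paths are hypothetical, and deleted files are not handled at all.

```python
# Toy model: a filesystem is a dict mapping path -> content.
# A "commit" stores only the entries that differ from the parent image.
# Deleted files are ignored in this sketch.

def commit(parent_files, current_files):
    """Return a new layer holding only the differences from the parent."""
    return {
        path: content
        for path, content in current_files.items()
        if parent_files.get(path) != content
    }


def flatten(layers):
    """Merge layers from the base upwards; later layers win."""
    merged = {}
    for layer in layers:
        merged.update(layer)
    return merged


base = {"/bin/sh": "shell", "/etc/hostname": "base"}

# Make some changes on top of the base image.
working = dict(base)
working["/app/server.py"] = "print('hello')"
working["/etc/hostname"] = "web"

layer = commit(base, working)
print(sorted(layer))                      # ['/app/server.py', '/etc/hostname']
print(flatten([base, layer]) == working)  # True
```

Note that the new layer is useless on its own; it only makes sense stacked on top of the base it was committed against.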

Running your image / container

When launching your manually built image it will need the base image it was created from, or on top of, including (if applicable) any additional images in between. Your image, or images, will then be layered on top of the base image. When Docker runs / creates a container from an image, it will add a read-write layer on top of the image (using the layered file system as mentioned earlier) in which your application can then run. If it can’t find the image(s) it needs, it will automatically try to download it / them from the Docker hub. See the ‘pushing and pulling’ section a few paragraphs down for some more details.

As mentioned, base images are best compared to default operating-system disk images holding all necessary files and folders needed to run applications. Although running multiple processes / services / applications from a single container is possible, it is recommended, and seen as an absolute best practice, to run ‘just’ a single process / service per container. If that process or service depends on another service etc. you should use container linking.
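The read-write layer on top of read-only image layers behaves a lot like Python’s `collections.ChainMap`, where lookups fall through the stack and writes only ever touch the first mapping. The sketch below uses that as an analogy; the file paths and the `create_container` helper are hypothetical, and real layered file systems are obviously more involved.

```python
from collections import ChainMap

# Read-only image layers, modelled as plain dicts (path -> content).
base_image = {"/etc/os-release": "linux", "/bin/sh": "shell"}
app_image = {"/app/run.sh": "start"}


def create_container(*image_layers):
    """Stack a fresh, empty read-write layer on top of the image layers.

    image_layers are given topmost layer first.
    """
    return ChainMap({}, *image_layers)


container = create_container(app_image, base_image)

# Writes land in the container's own read-write layer only.
container["/tmp/log"] = "started"
container["/etc/os-release"] = "patched"

print(container["/etc/os-release"])   # patched
print(base_image["/etc/os-release"])  # linux

# A second container from the same images starts out clean.
other = create_container(app_image, base_image)
print(other["/etc/os-release"])       # linux
```

This is also why many containers can share the same image files: none of them ever writes to the image layers themselves.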

Run image run…

When you ‘run’ or launch a container you basically tell Docker which image file to use to create the container. Once Docker sees and can access the associated image, it will use it to create the container around it (I think that’s a proper way to describe it). Remember that the image file contains all the actual configuration information and tells Docker what service to run or which application / process etc. to launch. In short: it first needs all the associated image files to initiate the container creation process; next it will run the configuration information held by the image file(s) from the container.

As mentioned, when launching a container you need to specify, at a minimum, which image file to run to create the container. Secondly, you also need to specify a command to run. At least one command is mandatory. Keep this in mind.
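As a small illustration of ‘an image plus a command’, the sketch below builds the argument list for a `docker run IMAGE COMMAND` call without actually executing it. The `docker_run_args` helper is my own, hypothetical name, and it simply follows the rule above that a command is supplied explicitly; images that bring their own default command fall outside this sketch.

```python
def docker_run_args(image, command):
    """Build the argument list for `docker run IMAGE COMMAND [ARG...]`.

    Nothing is executed here; the list could be handed to subprocess.run
    on a machine that has the Docker client installed.
    """
    if not image:
        raise ValueError("an image name is required")
    if not command:
        raise ValueError("a command to run inside the container is required")
    return ["docker", "run", image, *command]


print(docker_run_args("ubuntu", ["echo", "hello"]))
# ['docker', 'run', 'ubuntu', 'echo', 'hello']
```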

Dockerfiles

When building an image you can take the manual approach and do everything by hand, one command, or instruction as we’ve learned earlier, at a time. Depending on what you are trying to achieve this might just work fine. However, in most cases you would probably like to automate this process as much as possible. This comes from Docker.com: Docker can build images automatically by reading the instructions from a ‘Dockerfile’. A ‘Dockerfile’ is a text document that contains all the commands you would normally execute manually in order to build a Docker image. By calling ‘docker build’ from your terminal, you can have Docker build your image step by step, executing the instructions successively. Have a look here as well.
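To give an idea of what such a file looks like and how it breaks down into successive steps, here is a rough Python sketch. The Dockerfile content is a made-up example (a base image, two RUN instructions and a CMD), and the parser is deliberately naive: it ignores line continuations and is in no way Docker’s own parser.

```python
# A hypothetical Dockerfile, embedded as a string for illustration.
DOCKERFILE = """\
# Hypothetical example image
FROM ubuntu:14.04
RUN apt-get update
RUN apt-get install -y nginx
CMD ["nginx", "-g", "daemon off;"]
"""


def parse_dockerfile(text):
    """Return (INSTRUCTION, arguments) pairs, skipping blanks and comments."""
    steps = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        instruction, _, arguments = line.partition(" ")
        steps.append((instruction.upper(), arguments.strip()))
    return steps


# Each instruction is one build step, executed in order.
for number, (instruction, arguments) in enumerate(parse_dockerfile(DOCKERFILE), start=1):
    print(f"Step {number}: {instruction} {arguments}")
```

Every step in that list corresponds to one of the ‘instructions’ discussed earlier, which is a good reason to keep the list short.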

Pushing and pulling

So now that we have a general understanding of what Docker is, where it comes from and how containers and images ‘need’ each other, let’s have a look at where to get, and where to store our images.

Images are stored in so-called Docker (hub) registries. These can be local, to your company for example, or publicly available on the Internet. https://registry.hub.docker.com is the primary one used by Docker. Another example is https://github.com/docker/docker-registry.

When a container is run (remember that you will have to tell it, at least, which image to use, including a command to execute), Docker will look for the image(s) it needs on the Docker host from where the container was initialized. If the image (or one of the images) needed to ‘form’ the container isn’t there, Docker will automatically try to download it; by default it will contact the Docker hub registry (registry.hub.docker.com) mentioned above.

Yes, you can run a container without having all the necessary images local on your Docker host.

This process will slow down the initial container launch. To prevent this from happening we can pre-load images by downloading them from the Docker registry, or your own private (company) Docker registry / hub. This way the image(s) needed will be locally stored on the Docker host from where the container will be launched, speeding up the process.
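The ‘look locally first, download only when missing’ behaviour, and the effect of pre-loading, can be modelled roughly as follows. The `DockerHostModel` class and the image names are hypothetical stand-ins of my own; no real registry is contacted.

```python
class DockerHostModel:
    """Toy model of a Docker host with a local image store."""

    def __init__(self, registry):
        self.registry = registry   # image name -> image content (stand-in)
        self.local_images = {}     # what is already stored on this host
        self.pull_count = 0        # how often we had to go to the registry

    def pull(self, name):
        """Download an image from the registry into the local store."""
        if name not in self.registry:
            raise LookupError(f"image not found in registry: {name}")
        self.local_images[name] = self.registry[name]
        self.pull_count += 1

    def run(self, name, command):
        """Run a command from an image, pulling the image only if missing."""
        if name not in self.local_images:
            self.pull(name)
        return f"running {command!r} from image {name}"


registry = {"ubuntu": "ubuntu-layers", "nginx": "nginx-layers"}

host = DockerHostModel(registry)
host.pull("ubuntu")              # pre-load, so the first launch is fast
host.run("ubuntu", "echo hello")
print(host.pull_count)           # 1: the run itself did not need to pull

host.run("nginx", "nginx")       # not pre-loaded: pulled on first run
host.run("nginx", "nginx")       # second run uses the local copy
print(host.pull_count)           # 2
```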

Publicly available images, as mentioned, are stored in online Docker registries. Downloading an image is called ‘pulling’. When we create our own image, or build on top of an existing one, and we would like to make it publicly available, we can upload it to the online, publicly accessible Docker registry; this is called ‘pushing’. The same principles apply when dealing with a private, or company owned, Docker registry.

It’s all about the kernel

Containers share the underlying Linux kernel and with it the host’s compute resources. However, by leveraging Linux kernel functionalities like cgroups and namespaces, resources can be isolated (CPU, memory etc.), services can be restricted and certain processes can be limited as well. There are, however, a number of kernel subsystems and devices that are not namespaced and thus are shared among all containers active on the host, including cgroups. In the (visual) overview below I’ve also included the image build / change process as explained earlier.
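If you are curious which namespaces and cgroups a process belongs to, a Linux system exposes this under /proc. The sketch below reads `/proc/self/ns` and `/proc/self/cgroup` for the current process; the two helper functions are my own, and on a non-Linux machine (or where /proc is not available) they simply return empty results.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace name: identifier} for a process, or {} if unavailable."""
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}
    result = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink identifying the namespace instance.
            result[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue
    return result


def list_cgroups(pid="self"):
    """Return the lines of /proc/<pid>/cgroup, or [] if unavailable."""
    try:
        with open(f"/proc/{pid}/cgroup") as handle:
            return [line.strip() for line in handle if line.strip()]
    except OSError:
        return []


for name, identifier in list_namespaces().items():
    print(f"namespace {name}: {identifier}")
for entry in list_cgroups():
    print(f"cgroup: {entry}")
```

Two processes that show the same identifier for a given namespace share that namespace; processes inside different containers would typically show different ones.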

Docker for Windows

As you might know or have heard by now, Microsoft and Docker have announced a partnership in which they will bring the Windows Server ecosystem into the Docker community. They describe four primary steps in achieving this; you can read all about it here. Of course all four steps are important in making this a success, and some of them are already completed today, but the one I’m most interested in is the Docker engine for Windows, introducing Windows Server containers as of Windows Server 2016 (Nano). Once ‘live’ I’m sure we’ll see a mix of Linux and Windows containers emerge on various Windows platforms, including Azure. The integration of the Docker Registry Hub into Azure is another big step, and going forward the Docker Registry Hub will also contain the announced Windows Server containers once ready.

General adoption

As it stands today (application) containerization is still more of a buzzword, or hype, than anything else. Although several companies have already started using application containers in production, some on a large scale even, it is still far from mainstream. There is a lot of talk but not much action, at least not as far as I can see anyway, but maybe it’s different in the US for example? To be honest, a lot of companies, and I’m actually referring to their IT departments here, don’t even know about Docker, or containerization in general, just yet. Which isn’t that strange.

Now that VMware has jumped into the game and with Microsoft betting heavily on their Nano release, I’m positive we’ll see some changes not too long from now. Microsoft especially, with their Windows version of Docker, will have a positive impact on the general adoption of containerization, I’m sure.

Citrix

Although they primarily focus on virtualising the workspace and Windows applications in particular, with XenMobile being the exception, I’m keen to see if Citrix will get on board as well, now that VMware has with Photon and Lightwave. They too have an awesome cloud platform, which could be leveraged to do something similar. Perhaps they’ll wait it out, team up with Microsoft and surprise us as soon as Nano hits the shelves. Also, Synergy is right around the corner, so who knows what they might share. Let’s just wait and see. Their move.

Update 29-04-2015: Shortly after publishing this article the new XenServer Dundee (Alpha 1) release came to my attention. It appears that one of the future XenServer releases will provide Docker support in the form of a Container management supplemental pack, which needs to be installed on the XenServer host. Also, once updated, XenCenter can be used to manage your containers. Sounds great!

Some of the reference materials used: www.quora.com, docker.com, wikipedia.com and vmware.com
